Counting the lines of a very large compressed file

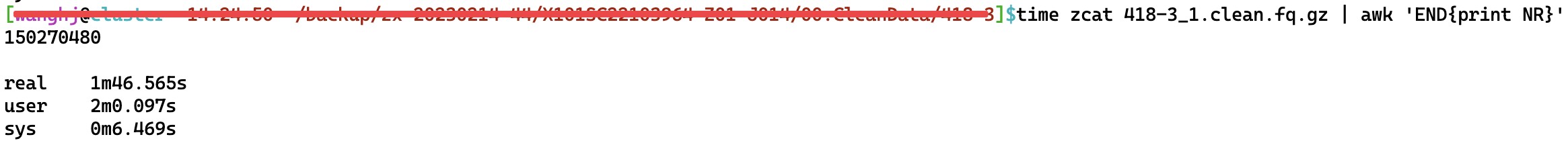

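There are many ways to do this, mostly by piping zcat into awk, sed, or wc, but none of them is very fast.

For example, awk should be the fastest of the three, yet a file of about 3 GB still took nearly 2 minutes, and I still have several 30 GB files to check.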
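For comparison, the `wc -l` at the end of such a pipeline only counts newline bytes, on a single thread. The sketch below does the same counting in C++, reading a stream in fixed-size blocks. The function name `count_newlines` and the 1 MiB default block size are illustrative assumptions, not taken from the original post.

```cpp
#include <algorithm>
#include <cstdio>
#include <vector>

// Hypothetical helper: count '\n' bytes in a stream (the number `wc -l` reports),
// reading block_size bytes at a time on a single thread.
unsigned long long count_newlines(std::FILE* in, std::size_t block_size = 1 << 20) {
    std::vector<char> block(block_size == 0 ? 1 : block_size);
    unsigned long long count = 0;
    std::size_t n;
    while ((n = std::fread(block.data(), 1, block.size(), in)) > 0) {
        // A line split across two blocks is fine: each '\n' is seen exactly once
        count += static_cast<unsigned long long>(
            std::count(block.data(), block.data() + n, '\n'));
    }
    return count;  // stops at EOF or on a read error (check std::ferror(in) if needed)
}
```

Called as `count_newlines(stdin)` from a small `main`, it could stand in for `wc -l` at the end of a `zcat file.gz | ...` pipeline. It still pays for the pipe and for zcat's single-threaded decompression.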

Time spent sharpening the axe is never wasted, so I wrote a multithreaded program, in C++ for speed.

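```cpp
#include <algorithm>
#include <atomic>
#include <chrono>
#include <climits>
#include <iostream>
#include <string>
#include <thread>
#include <vector>

#include <sys/sysinfo.h>  // Linux-specific: sysinfo()
#include <zlib.h>

// Total line count, updated once by each worker thread
std::atomic<unsigned long long> line_count(0);

// Count the '\n' characters in the first chunk_size bytes of chunk
void count_lines(const std::string& chunk, int chunk_size) {
    unsigned long long local_count = 0;
    for (int i = 0; i < chunk_size; ++i) {
        if (chunk[i] == '\n') {
            ++local_count;
        }
    }
    line_count += local_count;  // one atomic add per chunk, not per line
}

int main(int argc, char* argv[]) {
    if (argc != 2) {
        std::cerr << "Usage: " << argv[0] << " <input_file>" << std::endl;
        return 1;
    }

    // Use about 75% of the hardware threads, but at least one:
    // hardware_concurrency() may return 0 when it cannot tell
    unsigned max_threads = std::max(1u, std::thread::hardware_concurrency() * 3 / 4);

    // Each worker gets its own copy of the buffer, so give each one about
    // 25% of free RAM divided by the thread count. freeram is expressed in
    // units of mem_unit bytes, and gzread() returns an int, so compute in
    // 64 bits and clamp the buffer size to INT_MAX.
    unsigned long long free_bytes = 64ULL << 20;  // fallback if sysinfo() fails
    struct sysinfo sys_info;
    if (sysinfo(&sys_info) == 0) {
        free_bytes = static_cast<unsigned long long>(sys_info.freeram) * sys_info.mem_unit;
    }
    unsigned long long per_thread = free_bytes / 4 / max_threads;
    int BUFFER_SIZE = static_cast<int>(std::min<unsigned long long>(per_thread, INT_MAX));
    BUFFER_SIZE = std::max(BUFFER_SIZE, 1 << 20);  // at least 1 MiB

    gzFile input_file = gzopen(argv[1], "rb");
    if (!input_file) {
        std::cerr << "Error opening file." << std::endl;
        return 1;
    }

    std::vector<std::thread> threads;
    std::string buffer(BUFFER_SIZE, '\0');
    int bytes_read = 0;

    auto start_time = std::chrono::high_resolution_clock::now();

    // gzread() decompresses sequentially on this thread. std::thread copies
    // its arguments, so each worker counts its own copy of the chunk and the
    // buffer can be reused for the next read right away.
    while ((bytes_read = gzread(input_file, &buffer[0], BUFFER_SIZE)) > 0) {
        threads.emplace_back(count_lines, buffer, bytes_read);
        if (threads.size() >= max_threads) {
            for (auto& t : threads) {
                t.join();
            }
            threads.clear();
        }
    }
    for (auto& t : threads) {
        t.join();
    }

    auto end_time = std::chrono::high_resolution_clock::now();
    auto duration = std::chrono::duration_cast<std::chrono::seconds>(end_time - start_time).count();

    if (bytes_read < 0) {  // gzread() returns -1 on a read or decompression error
        int errnum = 0;
        std::cerr << "Error reading file: " << gzerror(input_file, &errnum) << std::endl;
        gzclose(input_file);
        return 1;
    }
    gzclose(input_file);

    std::cout << "Number of lines: " << line_count.load() << std::endl;
    std::cout << "Time taken: " << duration << " seconds" << std::endl;
    return 0;
}
```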
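In the program above, each chunk ends wherever `gzread` happened to stop, usually in the middle of a line, and the total is still correct: every `'\n'` byte falls in exactly one chunk, so the per-chunk counts simply add up. The sketch below illustrates that property with a different split than the original uses (one in-memory string cut into equal slices, one thread per slice, each thread writing its own result slot instead of a shared atomic). `parallel_count_newlines` is a hypothetical name; compile with `-pthread`.

```cpp
#include <algorithm>
#include <cstddef>
#include <string>
#include <thread>
#include <vector>

// Hypothetical helper: count '\n' in data with num_threads threads, each
// scanning one disjoint slice. Slices may cut lines in half; that is harmless
// because each '\n' lies in exactly one slice, so the partial counts add up.
unsigned long long parallel_count_newlines(const std::string& data, unsigned num_threads) {
    num_threads = std::max(1u, num_threads);
    const std::size_t n = data.size();
    const std::size_t slice = (n + num_threads - 1) / num_threads;  // ceiling division

    // One result slot per thread, so no atomic or lock is needed
    std::vector<unsigned long long> partial(num_threads, 0);
    std::vector<std::thread> workers;
    for (unsigned t = 0; t < num_threads; ++t) {
        const std::size_t begin = std::min(n, t * slice);
        const std::size_t end = std::min(n, begin + slice);
        workers.emplace_back([&data, &partial, t, begin, end] {
            partial[t] = static_cast<unsigned long long>(
                std::count(data.data() + begin, data.data() + end, '\n'));
        });
    }
    for (auto& w : workers) {
        w.join();
    }

    unsigned long long total = 0;
    for (unsigned long long c : partial) {
        total += c;
    }
    return total;
}
```

The author's version cannot split this way up front because the decompressed size is unknown until `gzread` reaches the end, which is why it hands out chunks as they are read.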

This requires a compiler that supports C++11 or later (I used gcc 12.2). The compile command is:

```shell
g++ -std=c++11 -o gz_rownum gz_rownum.cpp -lz -pthread
```

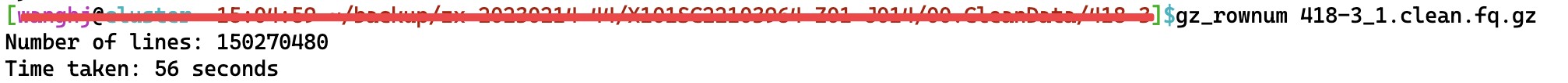

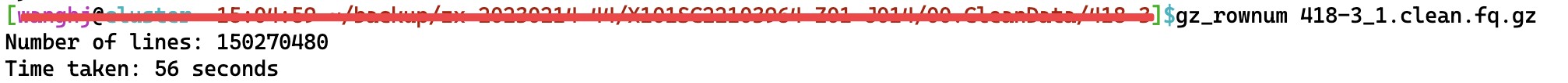

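Another benefit of a compiled program: put the binary in a directory on your PATH and you can run it from anywhere. Let's run it and check the result.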

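The run time dropped by about half. The job involves a lot of I/O, and `gzread` still decompresses the stream sequentially on the main thread (only the newline counting runs in parallel), so the speedup can't be dramatic; on a server with better specs the improvement should be more noticeable.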

- Posted on 2023-05-05 15:22
